Hi everyone,

I am confused about the difference between probability of error and probability of bit error. Which one should I use to compute BER?

The thing is, probability of bit error ranges from 0 to 0.5, so can it be expressed as BER, or is probability of error the BER?

I am confused about this part because my MATLAB simulation computes the probability of bit error.

Whereas probability of error ranges from 0 to 1.

Can you please clarify your problem a little more?

Probability of error, be it bit error or symbol error, should range from 0 to 1.

Bit error.

The thing is, the probability of bit error ranges from 0 to 0.5, and some IEEE papers mention that BER can be expressed as the probability of bit error.

I know probability of error ranges from 0 to 1.

Which one of them is BER? Probability of error or probability of bit error?

BER is the Bit Error Rate: if 40 out of 100 bits are in error, then the BER is 0.4. Does this clarify it for you?
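The counting definition above can be sketched in a few lines of Python. This is only an illustration: the function name `bit_error_rate` and the sample bit lists are made up for the example and are not from anyone's simulation in this thread.

```python
def bit_error_rate(tx_bits, rx_bits):
    """Measured BER: number of mismatched bits divided by number of bits sent."""
    if len(tx_bits) != len(rx_bits):
        raise ValueError("tx_bits and rx_bits must have the same length")
    if not tx_bits:
        raise ValueError("need at least one bit")
    errors = sum(t != r for t, r in zip(tx_bits, rx_bits))
    return errors / len(tx_bits)


# Hypothetical data: 100 zeros sent, 40 of them received as ones.
tx = [0] * 100
rx = [1] * 40 + [0] * 60
print(bit_error_rate(tx, rx))  # 0.4
```

Note that a measured ratio like this can take any value from 0 to 1 for a particular block of bits.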

BER is actually Bit Error Rate.

Yes.

So this means it's the probability of error, not of bit error, then.

Most IEEE papers mention this part.

Which is confusing me.

Probability of error is a general term; probability of bit error is more specific and is used when we are dealing with bit errors.

So which one is correct to use for simulation?

Because at low SNR my simulation gives 0.4100.

Around.

So this is the probability of bit error.

It should be the probability of bit error.

As BER?

If that's so, then it ranges from 0 to 0.5.

I didn’t get this. What’s the source for this?

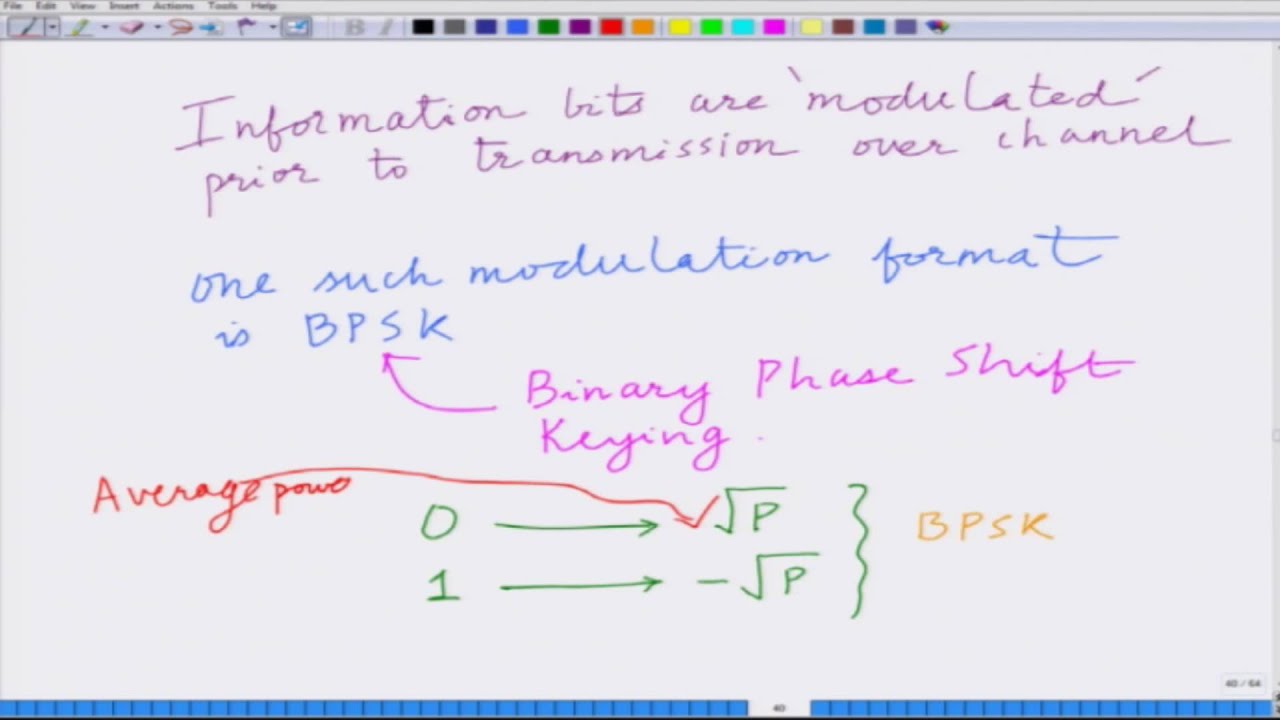

The number system is binary here, so we have either 0 or 1. For our communication system we mostly assume a BSC (Binary Symmetric Channel), which means a 0 and a 1 are flipped with the same probability. The transmitted bits are usually also assumed equally likely, each with probability 0.5 (= 1/2).

Assume we have to transmit 10 bits, all zeros, and the probability of bit error is 0.7. That means that, on average, I will receive seven 1s and three 0s.

In that case, the receiver can decide to decode 1s as 0s and 0s as 1s. That way I end up with seven 0s and three 1s, and the probability of bit error becomes 0.3.

So this is why the probability of bit error ranges from 0 to 0.5.

I just tried to use a shorter explanation to convey the idea.
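The inversion argument can be checked with a small Monte Carlo sketch. The assumptions here are mine, not from the thread: a memoryless BSC with crossover probability 0.7, 100,000 random bits, a fixed seed, and the helper names `bsc` and `ber`.

```python
import random


def bsc(bits, p, rng):
    """Binary symmetric channel: flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def ber(tx_bits, rx_bits):
    """Fraction of positions where the received bit differs from the sent bit."""
    return sum(t != r for t, r in zip(tx_bits, rx_bits)) / len(tx_bits)


rng = random.Random(0)  # fixed seed so the run is repeatable
tx = [rng.randint(0, 1) for _ in range(100_000)]
rx = bsc(tx, 0.7, rng)  # assumed crossover probability of 0.7

raw = ber(tx, rx)                        # receiver takes the bits as they arrive
inverted = ber(tx, [b ^ 1 for b in rx])  # receiver flips every decision

print(f"raw BER      = {raw:.3f}")       # close to 0.7
print(f"inverted BER = {inverted:.3f}")  # close to 0.3
```

The two figures always add up to 1, so a receiver that knows the channel flips more often than not can pick the better of the two and stay at or below 0.5. A receiver that does not invert still measures the raw value.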

This is very useful and helpful information.

Thanks a lot @pulkit!

I was confused. So this is how BER works.

In telecom, BER goes from 0 to 1, not from 0 to 0.5. The same goes for BLER.

All 10 bits in your explanation are wrongly decoded, so your BER is 1, not 0.3.

This description is for a general communication system where the receiver knows the nature of the channel.

But all bits could be wrongly decoded, so BER goes up to 1, not up to 0.5.

Can you please explain why all bits are wrongly decoded?

Your explanation is just a particular case.

It does not cover all the possibilities.

So that’s where the probabilistic nature comes into the picture: probability of error = 0.7 tells us that, out of 10 bits, 7 are in error on average, isn’t it?

SINR in 5G ranges from -23 dB to 40 dB.

You will never convince me that at SINR = -20 dB your BER could not go higher than 0.5.
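For reference, here is a sketch of the textbook bit error probability at those SINR values. It rests on assumptions the thread does not state: uncoded BPSK with coherent detection over an AWGN channel, and the quoted SINR treated as Eb/N0. The function name `bpsk_ber` is made up for the example.

```python
import math


def bpsk_ber(snr_db):
    """Theoretical bit error probability of coherent BPSK over AWGN.

    Uses Pb = 0.5 * erfc(sqrt(Eb/N0)), with snr_db interpreted as Eb/N0 in dB
    (an assumption made for this sketch).
    """
    snr_linear = 10 ** (snr_db / 10)
    return 0.5 * math.erfc(math.sqrt(snr_linear))


for snr_db in (-23, -20, -10, 0, 10):
    print(f"{snr_db:>4} dB -> Pb = {bpsk_ber(snr_db):.4f}")
```

Under these assumptions the expected value approaches 0.5 as the SINR drops but does not cross it. That is a statement about the average for a correctly configured receiver. It does not rule out a measured BER above 0.5 on a particular finite block, or for a receiver whose decisions are mismatched to the channel.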